Usually one must hard-code attribute locations and bind them to names, or, going in the opposite direction, hard-code names and bind them to locations. Most engines I see handle their vertex attributes by having explicit naming conventions, which seems primitive by comparison and I’m wondering if that’s the superior approach at the end of the day for some reason. I haven’t read anything on the internet about this, save a couple of stackoverflow questions, and I was wondering if there’s a significant drawback to this type of programmatic approach? If nobody has done it, surely there’s a reason why, or if there’s another approach that’s more popular then that would explain it. With the shader source in hand, you would never have to manually configure attributes or hardcode any names or locations again.

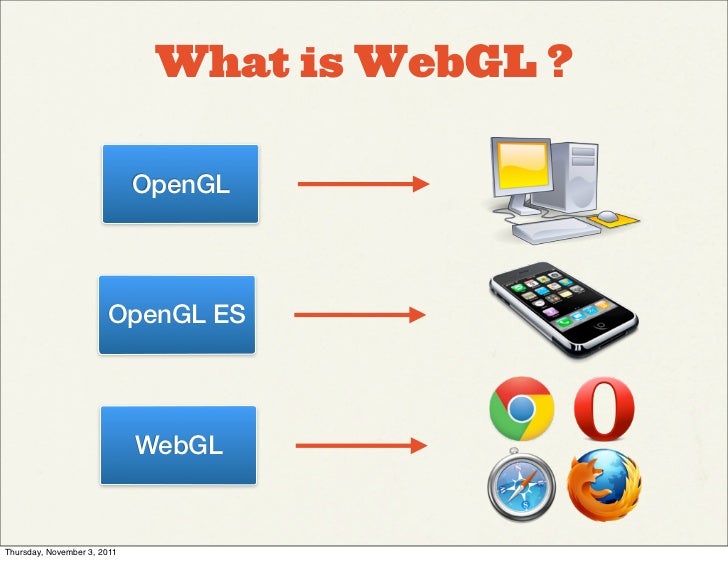

You could even implement some kind of runtime type checking system. They return all the information you need to automatically (or at least programmatically) iterate through and, in conjunction with glGetUniformLocation and glGetAttribLocation, create a mapping for all your glUniform* and glVertexAttribPointer calls for a particular program, and once you have created that mapping, you simply proceed to upload your data and draw. Namely, glGetActiveAttrib and glGetActiveUniform seem like immensely powerful functions. I was reading through the OpenGL ES 2.0 specification, since I’m in the ideas stage of writing a webgl renderer, and I came across the introspection API.
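The introspection-driven mapping described in the first paragraph could look roughly like the sketch below. It assumes WebGL 1.0, where `getProgramParameter`, `getActiveAttrib`, `getActiveUniform`, `getAttribLocation` and `getUniformLocation` are the counterparts of the glGet* functions named above. The names `IntrospectableGL`, `ProgramLayout` and `introspectProgram` are hypothetical, and the small interface exists only so the sketch compiles without DOM typings. A real `WebGLRenderingContext` should satisfy it because the method and constant names match.

```typescript
// Hypothetical minimal subset of WebGLRenderingContext used by this sketch.
interface ActiveInfo {
  name: string;
  size: number; // number of array elements (1 for non-arrays)
  type: number; // GLenum such as FLOAT_VEC3 or FLOAT_MAT4
}

interface IntrospectableGL<Program, UniformLoc> {
  readonly ACTIVE_ATTRIBUTES: number;
  readonly ACTIVE_UNIFORMS: number;
  getProgramParameter(program: Program, pname: number): any;
  getActiveAttrib(program: Program, index: number): ActiveInfo | null;
  getActiveUniform(program: Program, index: number): ActiveInfo | null;
  getAttribLocation(program: Program, name: string): number;
  getUniformLocation(program: Program, name: string): UniformLoc | null;
}

interface AttribEntry { location: number; type: number; size: number; }
interface UniformEntry<UniformLoc> { location: UniformLoc; type: number; size: number; }

interface ProgramLayout<UniformLoc> {
  attributes: Record<string, AttribEntry>;
  uniforms: Record<string, UniformEntry<UniformLoc>>;
}

// Walks every active attribute and uniform of a linked program and records
// its location, GLenum type and array size, keyed by name.
function introspectProgram<Program, UniformLoc>(
  gl: IntrospectableGL<Program, UniformLoc>,
  program: Program,
): ProgramLayout<UniformLoc> {
  const layout: ProgramLayout<UniformLoc> = { attributes: {}, uniforms: {} };

  const attribCount: number = gl.getProgramParameter(program, gl.ACTIVE_ATTRIBUTES);
  for (let i = 0; i < attribCount; i++) {
    const info = gl.getActiveAttrib(program, i);
    if (info === null) continue;
    layout.attributes[info.name] = {
      location: gl.getAttribLocation(program, info.name),
      type: info.type,
      size: info.size,
    };
  }

  const uniformCount: number = gl.getProgramParameter(program, gl.ACTIVE_UNIFORMS);
  for (let i = 0; i < uniformCount; i++) {
    const info = gl.getActiveUniform(program, i);
    if (info === null) continue;
    // Array uniforms may be reported as "name[0]"; key them by the base name.
    const baseName = info.name.replace(/\[0\]$/, "");
    const location = gl.getUniformLocation(program, info.name);
    if (location === null) continue;
    layout.uniforms[baseName] = { location, type: info.type, size: info.size };
  }
  return layout;
}
```

In a browser one would presumably call `introspectProgram(gl, program)` once after `gl.linkProgram` succeeds, then, for each entry in `layout.attributes`, call `gl.enableVertexAttribArray(entry.location)` and `gl.vertexAttribPointer(entry.location, ...)` with a component count derived from `entry.type`.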

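The "runtime type checking system" idea could start as small as the sketch below: use the `type` and `size` that `getActiveUniform` reports to check that a value about to be uploaded has a plausible number of components. The enum values come from the OpenGL ES 2.0 specification. `COMPONENTS_PER_TYPE`, `UniformInfo` and `checkUniformValue` are hypothetical names. The "at most `size` elements" limit is a deliberately strict assumption of this sketch, not a rule the API enforces.

```typescript
// GLenum uniform types (OpenGL ES 2.0 / WebGL 1.0) mapped to the number of
// scalar components one element of that type occupies.
const COMPONENTS_PER_TYPE: Record<number, number> = {
  0x1406: 1, // FLOAT
  0x8b50: 2, // FLOAT_VEC2
  0x8b51: 3, // FLOAT_VEC3
  0x8b52: 4, // FLOAT_VEC4
  0x1404: 1, // INT
  0x8b53: 2, // INT_VEC2
  0x8b54: 3, // INT_VEC3
  0x8b55: 4, // INT_VEC4
  0x8b56: 1, // BOOL
  0x8b57: 2, // BOOL_VEC2
  0x8b58: 3, // BOOL_VEC3
  0x8b59: 4, // BOOL_VEC4
  0x8b5a: 4, // FLOAT_MAT2
  0x8b5b: 9, // FLOAT_MAT3
  0x8b5c: 16, // FLOAT_MAT4
  0x8b5e: 1, // SAMPLER_2D (set to a texture unit index)
  0x8b60: 1, // SAMPLER_CUBE (set to a texture unit index)
};

// The subset of the getActiveUniform result this check needs.
interface UniformInfo {
  name: string;
  type: number;
  size: number;
}

// Returns null if `value` looks compatible with the uniform, otherwise an
// error message. This is a rough plausibility check, not the spec's rules.
function checkUniformValue(info: UniformInfo, value: number | ArrayLike<number>): string | null {
  const components: number | undefined = COMPONENTS_PER_TYPE[info.type];
  if (components === undefined) {
    return `${info.name}: unsupported uniform type 0x${info.type.toString(16)}`;
  }
  const length = typeof value === "number" ? 1 : value.length;
  const maxLength = components * info.size;
  // Require whole elements, and no more elements than the uniform declares.
  if (length === 0 || length % components !== 0 || length > maxLength) {
    return `${info.name}: expected a multiple of ${components} values (at most ${maxLength}), got ${length}`;
  }
  return null;
}
```

A renderer could run this check in debug builds just before dispatching to the matching `uniform*` call and skip it in release builds, so the introspection data doubles as a cheap validation layer.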